As edge devices demand faster, more efficient, and privacy-preserving AI processing, traditional digital hardware is hitting its limits. GPUs and CPUs consume considerable energy, struggle with real-time learning inside an edge power budget, and often push heavy workloads to the cloud. In contrast, memristor-based neuromorphic chips represent a fundamental shift: they combine memory and computation in the same physical device, loosely analogous to how synapses both store and process signals in the brain. This approach enables massively parallel, low-power, low-latency AI that can run directly on edge devices without cloud dependency.

Memristors: A Foundation for Neuromorphic Computing

A memristor (memory-resistor) is a two-terminal device whose resistance can be modulated and remembered even when power is removed. Its synapse-like behavior makes it ideal for neuromorphic systems.

Why Memristors Matter

- Non-volatile memory preserves data without constant energy.

- Analog resistive states allow multiple weight levels for neural networks.

- High density enables large crossbar arrays for matrix computations.

- In-memory computing reduces the data-transfer bottleneck between memory and processor (the von Neumann bottleneck).

These features allow memristors to act as artificial synapses, enabling brain-inspired computing where memory and computation happen at the same physical location.
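As a rough illustration of how analog resistive states can hold multiple weight levels, the sketch below maps a normalized weight onto the nearest of a fixed number of conductance states. The conductance range, the 16-level count, and the function names are assumptions chosen for the example, not values taken from any particular device.

```python
def weight_to_conductance(w, g_min=1e-6, g_max=1e-4, levels=16):
    """Map a weight in [0, 1] to the nearest of `levels` evenly spaced
    conductance states (in siemens). All defaults are illustrative."""
    if levels < 2:
        raise ValueError("need at least two conductance levels")
    w = min(max(w, 0.0), 1.0)              # clip to the representable range
    step = (g_max - g_min) / (levels - 1)  # spacing between adjacent states
    index = round(w * (levels - 1))        # nearest programmable state
    return g_min + index * step


def conductance_to_weight(g, g_min=1e-6, g_max=1e-4):
    """Inverse mapping: read a conductance back as a weight in [0, 1]."""
    return (g - g_min) / (g_max - g_min)


# Round trip: the recovered weight differs from the original by at most
# half a quantization step, i.e. 0.5 / (levels - 1).
g = weight_to_conductance(0.3)
print(g, conductance_to_weight(g))
```

More levels per device reduce the quantization error, but real devices offer a limited number of reliably distinguishable states, which is one reason the algorithms discussed later need to tolerate low-precision weights.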

Neuromorphic System Architecture

Neuromorphic systems are designed to replicate how biological neural networks process information. A typical architecture includes:

- Neuron circuits

- Synapse arrays (memristor crossbars)

- Neural cores or tiles

- Event-driven communication networks

- Low-power analog or mixed-signal pathways

Memristors allow neural operations such as integrate-and-fire, vector-matrix multiplication, and learning rules to occur natively in hardware. This gives edge devices very low-latency decision-making.

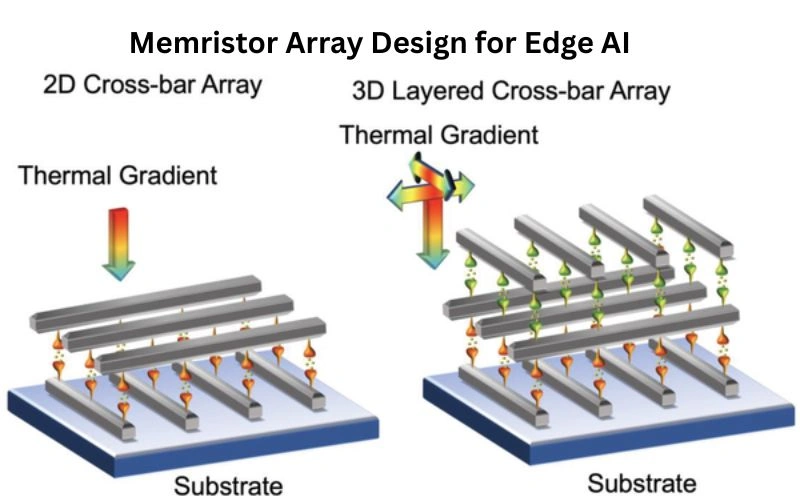
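The neuron circuits listed above are often modeled as leaky integrate-and-fire units: the membrane potential accumulates input, decays over time, and emits a spike when it crosses a threshold. The following is a minimal discrete-time sketch in software; the threshold, leak factor, and function name are assumptions for illustration, and a real neuron circuit would realize this behavior in analog or mixed-signal hardware.

```python
def simulate_lif(input_currents, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    Returns a list with 1 where the neuron spikes and 0 elsewhere.
    """
    v = v_reset
    spikes = []
    for i_in in input_currents:
        v = leak * v + i_in        # leak the old potential, then integrate input
        if v >= threshold:
            spikes.append(1)       # fire ...
            v = v_reset            # ... and reset the membrane potential
        else:
            spikes.append(0)
    return spikes


# A constant sub-threshold input accumulates until the neuron fires.
print(simulate_lif([0.5, 0.5, 0.5, 0.5]))  # [0, 0, 1, 0]
```

Because the neuron only produces output when it spikes, downstream circuitry can stay idle between events, which is the basis of the event-driven communication mentioned in the architecture list.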

Memristor Array Design for Edge AI

Memristor arrays, especially crossbar arrays, serve as the core computational blocks.

Benefits of Memristor Arrays

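- Compute a vector-matrix multiplication in a single analog read step, nominally O(1) in array size, although wire resistance and the peripheral converters limit this in practice.

- Perform multiply-accumulate (MAC) operations using Ohm’s law (each device multiplies voltage by conductance) and Kirchhoff’s current law (each column sums the resulting currents).

- Enable a degree of parallelism that digital processors struggle to match at comparable power.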

For edge AI applications such as image processing, voice recognition, anomaly detection, and sensor fusion, this architecture drastically reduces latency and energy consumption.
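To make the multiply-accumulate idea concrete, the sketch below emulates an ideal crossbar in software: each device contributes a current V_i * G_ij (Ohm's law), and each column wire sums those currents (Kirchhoff's current law). The function names, the example voltages, and the differential-pair scheme for signed weights are illustrative assumptions; the model ignores wire resistance, device nonlinearity, and converter effects.

```python
def crossbar_vmm(voltages, conductances):
    """Ideal crossbar read: column current I_j = sum_i V_i * G[i][j].

    `voltages` has one entry per row; `conductances` is a rows x cols list.
    The loop is sequential here, but the physical array produces all column
    currents at once.
    """
    if len(voltages) != len(conductances):
        raise ValueError("one input voltage is required per crossbar row")
    cols = len(conductances[0])
    currents = [0.0] * cols
    for v, row in zip(voltages, conductances):
        for j in range(cols):
            currents[j] += v * row[j]  # per-device Ohm's law, summed on the column
    return currents


def differential_vmm(voltages, g_pos, g_neg):
    """Signed weights via two arrays: effective weight is G_pos - G_neg."""
    i_pos = crossbar_vmm(voltages, g_pos)
    i_neg = crossbar_vmm(voltages, g_neg)
    return [p - n for p, n in zip(i_pos, i_neg)]


voltages = [0.1, 0.2]                        # read voltages in volts
conductances = [[1e-5, 2e-5], [3e-5, 4e-5]]  # device conductances in siemens
print(crossbar_vmm(voltages, conductances))  # column currents in amperes
```

Since a conductance cannot be negative, pairing two devices per weight as in `differential_vmm` is one common way to represent signed weights, at the cost of doubling the device count.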

Integrated Memristor Chip Technology

A fully integrated memristive chip combines:

- Memristor crossbars

- Analog-to-digital interfaces

- Neuron circuits

- Control processors

- Storage and communication modules

- On-chip training engines

By integrating all elements on a single chip, developers achieve:

- Faster data flow

- Lower power consumption

- Ultra-compact form factor

- Enhanced reliability

In 2025, research prototypes have demonstrated remarkable efficiency gains, surpassing many standard digital accelerators used in edge devices.

Algorithms Optimized for Memristor-Based Edge Systems

Memristor-based neuromorphic chips require algorithms that support:

- Analog weight updates

- Sparse neural activity

- Event-driven learning

- Noise-tolerant operations

- In-situ training (weights updated on-chip)

Popular algorithmic approaches include:

- Spike-Timing-Dependent Plasticity (STDP)

- Hebbian learning

- Binary and multi-level neural networks

- In-memory backpropagation variants

- Reservoir computing

These methods leverage the natural behavior of memristors to achieve learning with minimal energy.
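As one example from the list above, a pair-based form of STDP changes a synaptic weight according to the time difference between a presynaptic and a postsynaptic spike. The sketch below uses the common exponential window; the amplitudes, time constants, and function names are assumed values for illustration rather than parameters of any specific memristor.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for a spike pair, with dt = t_post - t_pre in milliseconds.

    Pre-before-post (dt > 0) potentiates; post-before-pre (dt < 0) depresses.
    """
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, dt, w_min=0.0, w_max=1.0):
    """Apply the STDP change and clip to the device's weight range."""
    return min(max(weight + stdp_delta(dt), w_min), w_max)


# Closely timed pairs change the weight more than widely separated ones.
print(stdp_delta(5.0), stdp_delta(50.0), stdp_delta(-5.0))
```

The clipping in `apply_stdp` stands in for the bounded conductance range of a physical device, which cannot be programmed beyond its minimum and maximum states.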

Methodology and On-Chip Training Approaches

1. On-Device Training

Memristors allow the network’s weights to be updated directly within the hardware array. This eliminates the need to transfer data between memory and processors.

2. Hybrid Analog-Digital Training

Algorithms run partially in the digital domain but update weights via analog signals.

3. Incremental Learning

Instead of full retraining, edge devices continuously refine models using local data, which is vital for wearables, sensors, and robotics.
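The hybrid approach can be sketched as follows: the error is computed digitally, then converted into a whole number of fixed-size programming pulses that nudge each normalized conductance up or down. This is a simplified delta-rule illustration for a single linear neuron under assumed values (learning rate, pulse step, weight range, function names); it does not model nonlinear or asymmetric device response.

```python
def pulse_update(g, grad, lr=0.1, pulse_step=0.01, g_min=0.0, g_max=1.0):
    """Turn a digitally computed gradient into an integer number of pulses.

    Positive counts increase the normalized conductance, negative counts
    decrease it; the result is clipped to the device range.
    """
    n_pulses = round(-lr * grad / pulse_step)
    return min(max(g + n_pulses * pulse_step, g_min), g_max)


def train_step(weights, x, target):
    """One incremental update from a single local sample.

    Returns the updated weights and the error measured before the update.
    """
    y = sum(w * xi for w, xi in zip(weights, x))  # stands in for the analog read
    error = y - target                            # computed in the digital domain
    new_weights = [pulse_update(w, error * xi) for w, xi in zip(weights, x)]
    return new_weights, error


# Refine the weights sample by sample instead of retraining from scratch.
weights = [0.5, 0.5]
for _ in range(40):
    weights, error = train_step(weights, [1.0, 0.0], target=1.0)
print(weights, error)
```

Because updates are quantized to whole pulses, learning stops once the required change is smaller than half a pulse, so the pulse step sets a floor on the achievable error.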

Why Memristor-Based Hardware is Transforming Edge AI in 2025

2025 marks a turning point due to rising demand for:

- Low-power inference

- Privacy-preserving AI

- Real-time learning

- On-site data processing

Memristors directly address these needs, with reported energy-efficiency gains on the order of 10–100x compared to CMOS-only AI accelerators.

Advantages Over CMOS and GPU-Based Inference

1. Power Consumption

Memristor neuromorphic chips can operate in the microwatt-to-milliwatt range, which suits battery-powered IoT devices.

2. Latency

In-memory computation avoids fetching weights from external memory, removing a major source of delay.

3. Scalability

Crossbar arrays can pack millions of synapses in ultra-small footprints.

4. On-Device Learning

GPUs can train models, but rarely within an edge power budget, and many edge accelerators are inference-only. Memristor arrays support both inference and in-situ training.

Key Challenges: Variability, Endurance, and Fabrication Scaling

Despite progress, challenges remain:

- Device variability affects accuracy.

- Endurance limits impact long-term reliability.

- Fabrication complexity requires advanced materials.

- Noise and drift must be compensated through algorithmic correction.

Ongoing research in materials engineering and circuit-level compensation is steadily improving performance.
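A simple way to get a feel for how device variability affects accuracy is a Monte Carlo experiment: perturb each conductance by random multiplicative noise and measure how far the column current drifts from the ideal value. The Gaussian noise model, the sigma values, and the function names below are assumptions for illustration; real devices show more complex, state-dependent variation.

```python
import random


def noisy_dot(voltages, conductances, sigma, rng):
    """Dot product with each conductance scaled by (1 + Gaussian noise)."""
    return sum(
        v * g * (1.0 + rng.gauss(0.0, sigma))
        for v, g in zip(voltages, conductances)
    )


def mean_relative_error(voltages, conductances, sigma, trials=1000, seed=0):
    """Average |noisy - ideal| / |ideal| over repeated random trials."""
    rng = random.Random(seed)  # fixed seed keeps the experiment repeatable
    ideal = sum(v * g for v, g in zip(voltages, conductances))
    total = 0.0
    for _ in range(trials):
        noisy = noisy_dot(voltages, conductances, sigma, rng)
        total += abs(noisy - ideal) / abs(ideal)
    return total / trials


voltages = [0.1, 0.2, 0.3, 0.4]
conductances = [1e-5, 2e-5, 3e-5, 4e-5]
for sigma in (0.01, 0.05, 0.10):
    print(sigma, mean_relative_error(voltages, conductances, sigma))
```

Sweeping `sigma` in this way gives a first-order estimate of how much variability a given network can absorb before compensation or noise-aware training becomes necessary.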

Applications: IoT Devices, Smart Sensors, Wearables & Robotics

Memristor-based edge AI is especially useful where power and size are critical:

- Smart home sensors

- Mobile and wearable health monitors

- Autonomous drones

- Industrial IoT

- Smart cameras and vision sensors

- Assistive robotics

- Environmental monitoring

- Smart agriculture devices

Real-time decision-making without cloud dependence makes these chips indispensable.

On-Device Learning vs Cloud-Based AI: A Performance Comparison

| Feature | Cloud-Based AI | Memristor Edge AI |
| --- | --- | --- |
| Latency | High | Ultra-low |
| Privacy | Risky | Secure |
| Power Use | High | Very Low |
| Offline Functionality | Limited | Full |
| Cost | Higher | Lower long-term |

Power Efficiency and Latency Metrics

Memristor chips achieve:

- <1 mW power usage in inference

- Microsecond-level latency

- High throughput via analog compute

- Large synaptic densities in small footprints

These metrics surpass traditional accelerators, making them ideal for compact, mobile devices.
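Power and latency figures combine into energy per inference, which is often the more useful number for battery-powered devices. The short calculation below uses 1 mW (the upper bound from the list above) together with an assumed 10 µs latency and a hypothetical 100 mWh battery; it ignores standby power, sensing, and communication overhead.

```python
def energy_per_inference_joules(power_watts, latency_seconds):
    """Energy = power x time for a single inference."""
    return power_watts * latency_seconds


def inferences_per_battery(battery_mwh, power_watts, latency_seconds):
    """Upper bound on inferences from one charge (1 mWh = 3.6 J)."""
    battery_joules = battery_mwh * 3.6
    return battery_joules / energy_per_inference_joules(power_watts, latency_seconds)


energy = energy_per_inference_joules(1e-3, 10e-6)  # 1 mW for 10 microseconds
print(energy * 1e9, "nJ per inference")            # about 10 nJ
print(inferences_per_battery(100, 1e-3, 10e-6))    # about 3.6e10 inferences
```

In practice the sensing front end, data converters, and idle power usually dominate the budget, so this figure is best read as an optimistic bound on the compute portion alone.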

Security Benefits of On-Chip Learning

Because data never leaves the device:

- Privacy is enhanced

- Attack surfaces are reduced

- Model parameters are harder to extract

- Local adaptation improves device resilience

This is crucial for medical wearables, cameras, and industrial IoT.

Market Outlook and Research Trends for 2025–2030

Analysts expect the neuromorphic market to grow sharply as edge AI expands. Key trends:

- Hybrid digital-analog neuromorphic SoCs

- Large-scale memristive crossbar integration

- On-chip continual learning models

- AI-powered sensors with built-in memristor arrays

By 2030, memristor-based chips could become standard in many consumer and industrial devices, similar to how Li-Fi technology and 6G terahertz communications are emerging in connectivity solutions.

Conclusion and Future Perspective

Memristor-based neuromorphic chips represent one of the most transformative technologies for edge AI. Their ability to combine memory and computation, mimic synapses, support in-memory training, and deliver ultra-efficient performance makes them ideal for the next generation of smart, autonomous, and secure devices. As we continue to explore emerging technologies like drone-based communication networks, tactile internet for healthcare, and programmable metasurfaces, memristor-based neuromorphic computing will play a crucial role in enabling these innovations to function efficiently at the edge.